Introduction to Avatar Kinect by Microsoft.

Avatar Kinect is a new social entertainment experience on Xbox LIVE bringing your avatar to life! Control your avatar’s movements and expressions with the power of Avatar Kinect. When you smile, frown, nod, and speak, your avatar will do the same.

Ah, new developments on the Kinect front, the premier platform for vision-based human action recognition, if we were to judge by the frequency of geeky news stories. For a while we have been seeing various gesture recognition ‘hacks’ (such as here). In a way, you could call every interaction people have with their Xbox games through a Kinect a form of gesture recognition. After all, they communicate their intentions to the machine through their actions.

What is new about Avatar Kinect? Well, the technology appears to pay specific attention to facial movements, and possibly to specific facial gestures such as raising your eyebrows, smiling, etc. The subsequent display of your facial movements on the face of your avatar is also a new kind of application for Kinect.

The Tech Behind Avatar Kinect

So, to what extent can smiles, frowns, nods and similar expressions be recognized by a system like Kinect? Well, judging from the demo movies, the movements appear to have to be quite big, even exaggerated, to be handled correctly. The speakers all use exaggerated expressions, in my opinion. This limitation of the technology would certainly not be surprising, because typical facial expressions consist of small movements or combinations of small movements. With the current state of the art in tracking and in learning to recognize gestures, making the right distinctions while ignoring unimportant variation is still a big challenge in any kind of gesture recognition. For facial gestures this is probably especially true, given the subtlety of the movements.
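To make that point a bit more concrete, here is a minimal sketch of why a cautious recognizer would favour big movements. It is not how Avatar Kinect works (I have no idea what Microsoft does internally); it simply assumes a tracker that reports how far a facial landmark has moved, and a detector that only fires when the movement clearly exceeds the tracker's own jitter. The function name `detect_expression`, the factor `k`, and all the numbers (in millimetres) are made up for illustration.

```python
from statistics import mean, pstdev


def detect_expression(displacements, noise_samples, k=3.0):
    """Flag each displacement that exceeds mean + k * std of the tracking noise.

    Hypothetical detector: 'displacements' are per-frame movements of one
    facial landmark, 'noise_samples' are movements measured on a neutral face.
    """
    threshold = mean(noise_samples) + k * pstdev(noise_samples)
    return [d > threshold for d in displacements]


# Illustrative, invented measurements in millimetres.
noise = [0.8, 1.2, 1.0, 0.9, 1.1]      # jitter of a mouth corner on a neutral face
subtle_smile = [1.3, 1.4, 1.2]          # small, natural movement
exaggerated_smile = [4.0, 5.5, 6.0]     # big, demo-style movement

print(detect_expression(subtle_smile, noise))
print(detect_expression(exaggerated_smile, noise))
```

With these invented numbers the subtle smile stays below the threshold in every frame, while the exaggerated one is flagged every time. Lowering `k` would catch the subtle smile, but it would also start firing on plain tracking jitter, which is exactly the trade-off between making the right distinctions and ignoring unimportant variation.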

A playlist with Avatar Kinect videos.

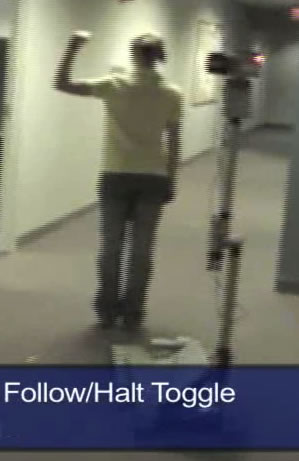

So, what is to be expected of Avatar Kinect? Well, first of all, a lot of exaggerating demonstrators, who make a point of gesturing big and smiling big. Second, the introduction of Second Life-style gesture routines for the avatar, just to spice up your avatar's behaviour (compare here and here). That would be logical. I think there are already a few in the demo movies, like the guy waving the giant hand in a cheer and doing a little dance.

Will this be a winning new feature of the Kinect? I am inclined to think it will not be, but perhaps this stuff can be combined with social media features into some new hype. Who knows nowadays?

In any case, it is nice to see the Kinect giving a new impulse to gesture and face recognition, simply by showcasing what can already be done and by doing it well.